Our story begins somewhere between 11 and 16 million years ago, in an era better known as the Middle Miocene epoch. Dogs and bears made their first appearances. The evolution of horses was galloping along over the landmass that became North America. In the western interior of North America, a great outpouring of lava formed the Columbia River basalt plateau, which became part of the American northwest landmass. On the other side of the planet, the Alps continued their ascent. In the midst of all this geophysical tumult, another critical event was taking shape: the enzyme uricase began to disappear in hominids.

There appear to have been three critical mutations in the uricase gene in humans, chimpanzees, and gorillas that led to the demise of the enzyme.1 Two were nonsense mutations, involving codons 33 and 187, and the third was a mutation in the splice acceptor signal of exon 3. The mutation of codon 33 is thought to be responsible for the major loss of uricase function. The promoter region of the gene had probably been degraded in the evolutionary process by previous mutations and, over time, humans and some other higher primates lost all uricase activity. Perhaps this seminal event should be considered the “Big Bang” of the rheumatology universe. After all, without it there would be no podagra, hot swollen ankles, or sore insteps. I suspect that most rheumatology consult services would have withered with the loss of such a sizable portion of their clinical revenue!

Gout is a wonderful disease to study. Its superficial simplicity (the culprit, uric acid, has the molecular formula C5H4N4O3) belies its complicated role in human biology. Despite our greater understanding of the pathogenesis of gout, many patients are misdiagnosed or treated inappropriately. Acute pain, redness, and swelling in a lower extremity joint, a raised serum urate, and voilà, the diagnosis is made. Really? Who needs a polarizing microscope? Certainly not the majority of practitioners who diagnose gout. In the U.S., it is estimated that rheumatologists account for fewer than 2% of all office visits for gout, compared with primary care doctors, who see 70%, and cardiologists, who manage 10% of gout sufferers. I suspect that allopurinol is one of the most widely misunderstood drugs prescribed by nonrheumatologists. Perhaps each prescription should contain a package insert advising the patient to confirm its use with a rheumatologist.

Did Lead Poisoning Contribute to the Fall of the Roman Empire?

It has been speculated that gout may have indirectly led to the demise of the Roman Empire. Let’s take a closer look. Although evidence of uric acid deposition in joints has been described in mummified Egyptian remains dating back 4,000 years, Hippocrates is considered to be the first person to accurately describe its clinical features. He recognized a pathogenic role for rich foods and wines and noted that its onset occurred following puberty in men and after menopause in women. We should also take him at his word that eunuchs were hardly ever affected by it. He believed in the value of diet therapy and purgatives, and opined that, “the best natural relief of this disease is an attack of dysentery.” This was the rationale for using meadow saffron, Colchicum autumnale, as a treatment for acute gout. This plant originated in the kingdom of Colchis, situated on the coast of the Black Sea, and became renowned for its use as either a laxative or a poison. The latter use was chronicled in Greek mythology. Medea, daughter of the king of Colchis and wife of Jason (of the Argonauts), used it to kill her children upon being betrayed by her husband.2

So why was gout rampant among the Romans? Well, their wine consumption is legendary, with an average intake estimated to be in the range of one to five liters per person per day. With these statistics, it is hard to see how they accomplished anything! Yet this particular risk factor for gout was overshadowed by another more ominous one—the presence of lead in most Roman cooking utensils.

Lead specifically inhibits the tubular secretion of uric acid, and it impairs the enzyme guanine aminohydrolase, resulting in an accretion of the insoluble purine guanine. Scholars believe that the most significant contamination of Roman wines stemmed from the widespread use of boiled-down grape syrup to enhance their color, sweetness, bouquet, and preservation.3 Recipes were clear about the need to simmer the flavors slowly in a lead pot or lead-lined copper kettle, in order to avoid admixing the harsh taste of copper rust. When some of these ancient recipes were recreated, the lead concentration measured 240 to 1,000 milligrams per liter, which is several thousand times greater than our current daily lead intake. Ingesting just one teaspoon of this vinous potion would be sufficient to induce chronic lead poisoning. Salute!
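
For readers who like to check the arithmetic, here is a rough sketch of how much lead a single teaspoon of such a syrup would hold. It assumes a teaspoon of 5 mL, a common rounding that the recipes themselves do not specify, and the function name is purely illustrative.

```python
# Back-of-envelope estimate of the lead contained in one teaspoon of syrup.
# Assumption: one teaspoon is taken as 5 mL (0.005 L); this is not stated in the text.
TEASPOON_L = 0.005


def lead_per_teaspoon_mg(concentration_mg_per_l: float) -> float:
    """Return the milligrams of lead in one teaspoon at the given concentration."""
    return concentration_mg_per_l * TEASPOON_L


# Measured range reported for the recreated recipes: 240 to 1,000 mg per liter.
low = lead_per_teaspoon_mg(240)
high = lead_per_teaspoon_mg(1000)
print(f"{low:.1f} to {high:.1f} mg of lead per teaspoon")
# prints "1.2 to 5.0 mg of lead per teaspoon"
```

Under that assumption, a single teaspoon would deliver roughly 1 to 5 milligrams of lead.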

An examination of skeletal remains dating back to the Roman era supports the concept that saturnine gout was pandemic among the aristocrats of the Roman Empire. (For unknown reasons, medieval alchemists named this base metal after the Titan Saturn, perhaps because lead seemed to devour all others, much as the old god ate his own children. Hence the term, saturnine gout.) Saturnine gout caused a painful and debilitating form of arthritis. The Stoic philosopher Gaius Musonius Rufus perceptively noted the health hazards of gout and plumbism:

“That masters are less strong, less healthy, less able to endure labor than servants; countrymen more strong than those who are bred in the city, those that feed meanly than those who feed daintily; and that, generally, the latter live longer than the former. Nor are there any other persons more troubled with gouts, dropsies, colics, and the like, than those who, condemning simple diet, live upon prepared dainties.”3

History Repeats Itself

Roman society may not have been the only community to suffer the ravages of saturnine gout.2 In the late 1600s, the British Parliament sought to limit commercial competition from the Dutch fleet by banning the importation of French wines, a cargo not carried by the British, in favor of Spanish and Portuguese wines. Port was particularly popular and it happened to be rich in lead. The consumption of port in England tended to parallel the incidence of gout, and both peaked in the 18th and 19th centuries. In contrast, gout was quite rare in other northern European countries where alcoholic beverages other than port were favored by all social classes. Gout came to symbolize the leisured class, whose members brought grief upon themselves through their excesses. Gout was perceived as being socially desirable because of its prevalence among the politically and socially powerful. A quip in the Times of London in 1900 echoed this view: “The common cold is well named—but the gout seems instantly to raise the patient’s social status.”4 The American satirist Ambrose Bierce, who resided in England during the 1870s, quipped that “gout was a physician’s name for the rheumatism of a rich patient.” The literature of this era was rife with satirical caricatures of gouty aristocrats and merchants.

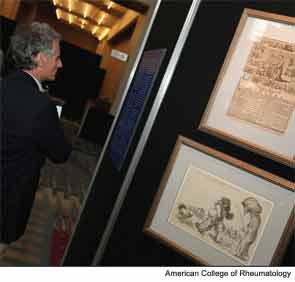

Perhaps the greatest collection of these images was amassed by our late colleague, Gerald P. Rodnan, MD (1927–1983). Dr. Rodnan spent most of his career with the department of medicine at the University of Pittsburgh School of Medicine. In 1956, he was appointed chief of the newly formed division of rheumatology and clinical immunology. He rapidly became noted as a teacher and clinical investigator and rose to the rank of professor of medicine in 1967. Dr. Rodnan published extensively and served as editor of several publications, including a major textbook of rheumatology that I fondly recall using to study for the rheumatology board examination. He was president of the American Rheumatism Association (now the ACR) from 1975 to 1976. Following his death, his estate graciously gave the Rheumatology Research Foundation permission to reproduce a limited number of prints from his collection of antique gout artwork.5 Each year, the Foundation releases a new print in honor of the ACR/ARHP Annual Meeting, where it is displayed along with previous years’ selections.

This year, a highly insightful commentary on the history of these lithographs accompanied the magnificent display in Washington, D.C. Written by Melvin C. Britton, MD, a distinguished master member of the ACR from Atherton, Calif., it describes the transition of gout from being regarded as an affliction unique to members of a bloated aristocracy to the spread of its pain and misery to the masses. This viewpoint was echoed by a witty remark in Punch magazine: “It is ridiculous that a man should be barred from enjoying gout because he went to the wrong school.”4 As Dr. Britton points out, these lithographs provided viewers with a biting commentary on the political currents of the era, spanning the late 18th century up to the early Victorian years.6 They reflected the intense political debates within and around the British Empire. The world had been turned upside down by the American Revolution. In France, the turmoil of the revolution, which spawned countless beheadings of members of the monarchy and aristocracy, was followed by Napoleon’s megalomania. It must have been refreshing for the citizenry to poke fun at the ruling class without the risk of incurring their wrath.

An American Link: Gout

The effect of gout on the British ruling class may have also influenced American political history.4 The British statesman and leader William Pitt the Elder suffered from gout. During one of Pitt’s gout-related absences from Parliament, the Stamp Act (1765) was passed, which forced the unwilling colonists to pay a tax to defray the costs of defending the colonies against French attack. Following his recovery from gout, Pitt succeeded in getting the act repealed with the famous words, “The Americans are the sons, not the bastards, of England. As subjects, they are entitled to the right of common representation and cannot be bound to pay taxes without their consent.” Unfortunately, during another of Pitt’s absences due to an episode of gout, Lord Townshend persuaded Parliament to levy a heavy duty on colonial imports of tea to raise the necessary revenues. This precipitated the Boston Tea Party (1773), and we all know the rest of this story!

Gout went on to play a part in the ratification of the Constitution as well. By January 1788, five of the nine required states had ratified it. One notable holdout was Massachusetts, whose governor, revolutionary leader John Hancock, was unable to make up his mind on the Constitution and took to his bed with what was seen at the time as a convenient case of gout. Later, after being tempted by the Federalists with the vice presidency, Hancock experienced a miraculous cure and delivered his critical block of votes.

Was There an Evolutionary Advantage To Losing Uricase?

Uric acid is a powerful radical scavenger and a chelator of metal ions, accounting for more than 50% of the antioxidant activity present in human biological fluids. Thus, it was assumed that hyperuricemia would be associated with an increased antioxidant capacity and a greater life expectancy for the affected individual. In fact, the reverse holds true: hyperuricemia is a marker for worsening renal function, heart disease, and the metabolic syndrome. (For more information on this subject, please see “Gout Complicates Comorbid Conditions.”)

So we are left with a paradox: Why was uricase function lost? The evolutionary benefits of losing uricase function and the corresponding rise in serum urate have generated many hypotheses. Some of the early theories suggested that raised serum urate levels may have spurred an evolutionary leap in the intellectual capacity of hominids.7 This theory was based on the finding that uric acid and brain stimulants such as caffeine share structural homologies. Over the years, a number of studies emerged claiming that patients with gout had higher mean intelligence scores on IQ testing. In fact, a study of the male faculty at the University of Michigan (such a highly accomplished and intelligent cohort) found that their mean serum urate concentrations were significantly greater than those of controls (no, they were not Ohio Buckeyes). Several subsequent larger studies failed to find a link between serum uric acid levels and intelligence.

A final blow to the theory linking urate to intelligence came after a careful study of the evolutionary time period when accelerated brain development occurred in hominids. The large increase in cerebral volume of hominid brains probably occurred 10 million years following the loss of the uricase enzyme. Thus, it is highly unlikely that these two events were linked.

Yet there still may be a gout–brain association. For example, gout has never been reported in patients with multiple sclerosis. Additionally, the very low incidence of other neurologic diseases, such as Parkinson’s disease, Alzheimer’s disease, and amyotrophic lateral sclerosis, in patients with gout adds some credence to the theory that elevated urate levels may confer some form of a neuroprotective effect.

There is a third hypothesis. The mutations affecting the uricase enzyme occurred at a time when hominids were woodland quadrupeds that inhabited subtropical forests and survived on a diet consisting mainly of fruit and leaves. The salt content of the diet was extremely low; some estimates place it at a paltry 200 mg per day, a far cry from today’s average consumption of 4,000 mg daily. During times of low salt ingestion, increasing serum urate levels may have served to maintain an adequate blood pressure acutely through stimulation of the renin–angiotensin pathway and chronically by inducing sensitivity to salt through the development of microvascular and interstitial renal disease. Thus, by helping to sustain blood pressure, an elevated serum urate may have conferred upon the host the evolutionary advantage of being able to walk upright.

Gout in the 21st Century

Early in this century, a major breakthrough in our understanding of the innate immune system was achieved with the discovery that uric acid crystals could trigger interleukin 1β–mediated inflammation via activation of the NOD-like receptor protein 3 (NLRP3) inflammasome, a multimolecular complex whose activation appears to be central to many pathological inflammatory conditions. But what is uric acid doing in this neighborhood frequented by microbes and other dangerous characters? Why is it serving as a danger signal? Herein lies the last great riddle of gout, which hopefully will be solved sometime soon. In the meantime, shouldn’t the finding of hyperuricemia be enough of a danger signal to alert patients to modify their lifestyles? It may be helpful to have some visual aids to assist you in this task. Consider purchasing a couple of Rodnan prints from the Foundation to cover your spare office walls. They are eye-catching and witty, and your gout patients will get the message!

Dr. Helfgott is physician editor of The Rheumatologist and associate professor of medicine in the division of rheumatology, immunology, and allergy at Harvard Medical School in Boston.

References

1. Wu X, Muzny DM, Lee CC, Caskey CT. Two independent mutational events in the loss of urate oxidase during hominoid evolution. J Mol Evol. 1992;34:78-84.

2. Bhattacharjee S. A brief history of gout. Int J Rheum Dis. 2009;12:61-63.

3. Nriagu J. Saturnine gout among Roman aristocrats. N Engl J Med. 1983;308:660-663.

4. Nuki G, Simkin PA. A concise history of gout and hyperuricemia and their treatment. Arthritis Res Ther. 2006;8(Suppl 1):S1.

5. The Rodnan Print Collection of the Rheumatology Research Foundation. www.rheumatology.org/foundation/posters/index.asp.

6. Rodnan GP. A gallery of gout. Arthritis Rheum. 1961;4:176-194.

7. Alvarez-Lario B, Macarron-Vicente J. Uric acid and evolution. Rheumatology. 2010;49:2010-2015.